What Is Compositing?

Compositing is the stage of animation and visual effects where all visual elements of a shot are brought together into a final image. Computer-generated imagery, live-action footage, 2D artwork, backgrounds, and practical elements are balanced, aligned, and integrated so they feel like a single, coherent piece of visual storytelling. In professional pipelines, compositing is where creative intent meets technical control.

1. Traditional Compositing: Bringing All Asset Types into Nuke

Nuke has long been the central point where disparate asset types are combined into a final image. A typical comp may include rendered CG passes, 2D artwork, matte paintings, green-screen photography, background plates, projections, and practical elements. Each asset arrives with its own colour space, resolution, motion characteristics, and technical quirks. Nuke’s node-based workflow allows compositors to normalise these inputs, align them in space and time, and integrate them through roto, keying, tracking, grading, relighting, and grain management. This approach is built around predictability and control: assets are prepared upstream, then shaped and blended in Nuke to produce a cohesive, shot-ready result.
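To make "normalise, then blend" concrete, here is a minimal per-pixel sketch in plain Python. It is not Nuke code. It assumes one element is sRGB-encoded 2D artwork and the background plate is already in linear light. The artwork is decoded with the standard sRGB transfer function, premultiplied by its alpha, and combined with the Porter-Duff "over" operation (A + B × (1 − a)), which is the maths a Merge node performs in its default over mode. In production, colour conversion is normally handled by colour management such as OpenColorIO rather than by hand. The function names here are hypothetical.

```python
def srgb_to_linear(c: float) -> float:
    """Decode one sRGB-encoded channel value (0-1) to linear light."""
    if c <= 0.04045:
        return c / 12.92
    return ((c + 0.055) / 1.055) ** 2.4


def premultiply(rgb, alpha):
    """Scale colour by alpha so it can be merged with premultiplied maths."""
    return tuple(ch * alpha for ch in rgb)


def over(fg_rgb, fg_a, bg_rgb, bg_a):
    """Porter-Duff 'over' for premultiplied inputs: A + B * (1 - a)."""
    rgb = tuple(f + b * (1.0 - fg_a) for f, b in zip(fg_rgb, bg_rgb))
    alpha = fg_a + bg_a * (1.0 - fg_a)
    return rgb, alpha


# Example: half-transparent orange artwork (sRGB) over a mid-grey linear plate.
art_linear = tuple(srgb_to_linear(c) for c in (1.0, 0.5, 0.0))
art_premult = premultiply(art_linear, 0.5)
comp_rgb, comp_alpha = over(art_premult, 0.5, (0.18, 0.18, 0.18), 1.0)
```

Converting before merging matters because the over operation assumes linear, premultiplied values. Blending sRGB-encoded values directly would darken or shift edges and semi-transparent areas.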

2. ComfyUI: Powerful Tools, Production Trade-Offs

ComfyUI brings similar node-based logic to Stable Diffusion, exposing a high level of control over prompts, reference images, masks, ControlNets, and temporal behaviour. While this flexibility is extremely powerful, embedding or tightly coupling ComfyUI processes into Nuke scripts can introduce performance and workflow issues. AI generation is computationally expensive, often GPU-bound, and inherently iterative. Running these processes alongside a comp can slow script evaluation, increase complexity, and make shots harder to version, debug, and hand off, especially in production environments where stability and repeatability are critical.

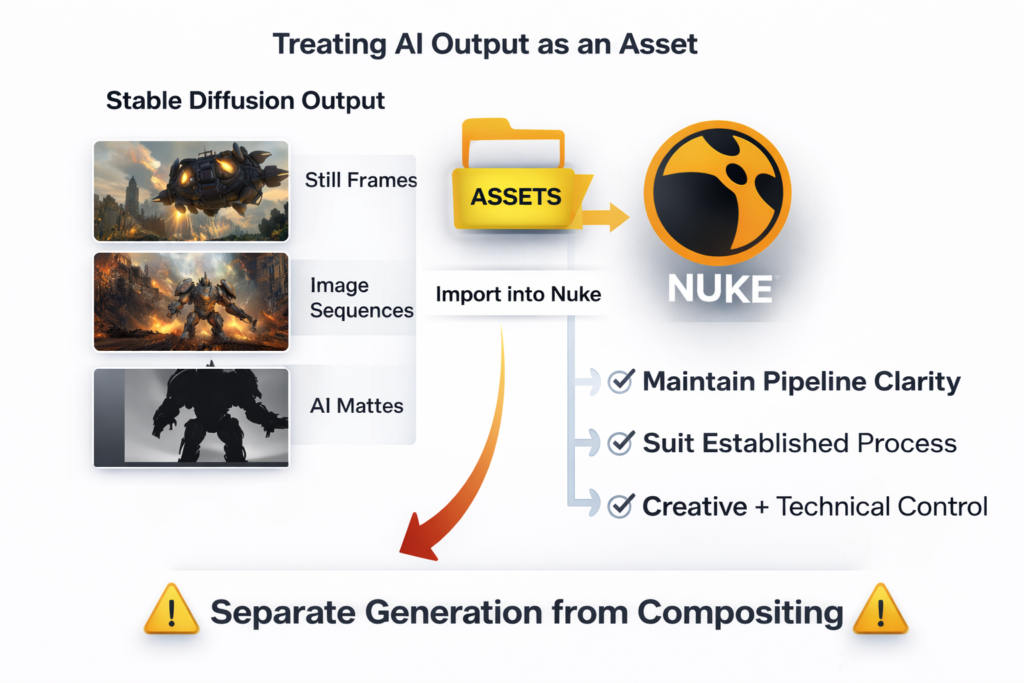

3. Treating AI Output as an Asset

For these reasons, it is more effective to treat Stable Diffusion output as a generated asset rather than a live process inside Nuke.

AI is used upstream to create images, sequences, textures, or mattes, which are then published and imported into Nuke like any other element. This keeps Nuke scripts clean, deterministic, and fast, while allowing AI exploration to remain flexible and experimental. By separating generation from compositing, we maintain a clear pipeline, preserve creative control, and integrate AI naturally into established VFX workflows.
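The publish step can be sketched in Python. This is a minimal illustration under assumed conventions, not a specific studio's pipeline. The folder layout (`<publish_root>/<asset>/v001`), PNG frame naming, the `manifest.json` file, and the `publish_generated_asset` helper are all hypothetical. The sketch copies frames produced upstream (for example by a ComfyUI workflow) into a new version folder that is never overwritten. It also records the generation settings, such as prompt and seed, alongside file checksums. This lets a compositor load a fixed version with an ordinary Read node and lets anyone trace how that version was made.

```python
import hashlib
import json
import shutil
from pathlib import Path


def next_version_dir(asset_root: Path) -> Path:
    """Return the next unused version folder (v001, v002, ...) under asset_root."""
    if not asset_root.is_dir():
        return asset_root / "v001"
    existing = [
        int(p.name[1:])
        for p in asset_root.glob("v[0-9][0-9][0-9]")
        if p.is_dir()
    ]
    return asset_root / f"v{max(existing, default=0) + 1:03d}"


def publish_generated_asset(frames_dir, publish_root, asset_name, generation):
    """Copy generated frames into a new versioned folder and write a manifest.

    `generation` is a dict of whatever is needed to reproduce the output
    (hypothetical keys such as prompt, seed, model or workflow file).
    """
    frames = sorted(Path(frames_dir).glob("*.png"))
    if not frames:
        raise ValueError(f"no frames found in {frames_dir}")

    version_dir = next_version_dir(Path(publish_root) / asset_name)
    # mkdir without exist_ok raises if the folder exists, so a version is never overwritten.
    version_dir.mkdir(parents=True)

    checksums = {}
    for frame in frames:
        dest = version_dir / frame.name
        shutil.copy2(frame, dest)
        checksums[frame.name] = hashlib.sha256(dest.read_bytes()).hexdigest()

    manifest = {
        "asset": asset_name,
        "version": version_dir.name,
        "frame_count": len(frames),
        "generation": generation,
        "checksums": checksums,
    }
    (version_dir / "manifest.json").write_text(json.dumps(manifest, indent=2))
    return version_dir
```

In this sketch, the Nuke script points at a specific version folder rather than a "latest" link. New AI iterations therefore land in v002, v003 and so on without changing what an existing comp reads.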